# Ubbic.app: A Smarter Rental Search Engine for Cordoba

Summary

A rental property search engine that scrapes the most popular real estate portals in Cordoba and adds richer search features, such as multi-neighborhood search, advanced filters, and searching within custom areas drawn on a map.

Where to try it

Tech Stack

For those interested in the technical details, here’s what the project is built with:

- Backend: FastAPI (Python) for the API.

- Frontend: React with TypeScript and Vite, styled with Tailwind CSS.

- Database: PostgreSQL with the PostGIS extension for geospatial queries.

- Data Scraping: Scrapy (Python).

- ORM: SQLModel for database interactions.

- Map Interface: React-Leaflet with Leaflet-Draw.

Technical Challenges

Building this project involved solving a few interesting problems:

- Bypassing Bot Protection: Modern websites are good at blocking scrapers. To get around this, I had to use a pool of rotating proxies and browser impersonation tools to make the scraper look like a real user.

- Efficient Map Queries: The “draw on map” feature was tricky. A simple database query to check if a point is inside a shape is very slow with thousands of properties. The proper solution was to use a real geospatial database. I used PostgreSQL with the PostGIS extension, which provides a function (`ST_Contains`) that can perform these queries almost instantly.

- Data Quality: The biggest headache was (and still is) handling the poor quality of the data. Agents often upload listings with missing coordinates, inconsistently written addresses (like ‘Av. Colón 100’ vs ‘Colon 100’), or placeholder prices. I had to write several data cleaning pipelines and a separate geocoder spider just to clean up this mess.
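To illustrate the address problem, here is a minimal sketch of how two spellings of the same address could be reduced to one comparable key. It is not the project's actual cleaning code: the function name `normalize_address` and the `STREET_PREFIXES` list are assumptions made for this example, and a real pipeline would likely need many more rules.

```python
import re
import unicodedata

# Hypothetical list of street-type words to ignore; a real one would be longer.
STREET_PREFIXES = {"av", "avda", "avenida", "bv", "bvd", "boulevard", "calle"}


def normalize_address(raw: str) -> str:
    """Reduce an address to a comparable key: no accents, no street-type prefix."""
    # Decompose accented characters and drop the combining marks (ó -> o).
    decomposed = unicodedata.normalize("NFKD", raw)
    ascii_text = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Lowercase, then turn every run of punctuation or whitespace into one space.
    tokens = re.sub(r"[^a-z0-9]+", " ", ascii_text.lower()).split()
    # Drop a leading street-type word such as "av" or "calle".
    if tokens and tokens[0] in STREET_PREFIXES:
        tokens = tokens[1:]
    return " ".join(tokens)
```

With this sketch, both ‘Av. Colón 100’ and ‘Colon 100’ normalize to the same key, so they can be matched as one address. The trade-off is that it also erases distinctions that may matter elsewhere, such as ‘ñ’ versus ‘n’.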

How it works

There are two main parts to the app:

Web scraper

The web scraper is one of the most fundamental parts of the app. It coordinates several spiders that crawl the main rental portals and store their results in a database.

It uses proxies and browser fingerprint spoofing to bypass bot protections.

It also has middlewares that prevent recently seen listings from being scraped again, thus saving on resources and time.
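The idea behind skipping recently seen listings can be sketched in a few lines. This is only an illustration under assumptions: the class name `RecentlySeenFilter`, the 24-hour window, and the in-memory dictionary are all hypothetical (the real app stores its results in a database). In Scrapy, a check like this could live in a downloader middleware whose `process_request` raises `scrapy.exceptions.IgnoreRequest` for URLs that should be skipped.

```python
from datetime import datetime, timedelta


class RecentlySeenFilter:
    """Remembers when each listing URL was last scraped."""

    def __init__(self, max_age: timedelta = timedelta(hours=24)):
        # Assumption: a listing counts as "recent" for 24 hours by default.
        self.max_age = max_age
        self._last_seen: dict[str, datetime] = {}

    def should_skip(self, url: str, now: datetime) -> bool:
        """Return True if the URL was scraped less than max_age before `now`."""
        last = self._last_seen.get(url)
        return last is not None and now - last < self.max_age

    def mark_seen(self, url: str, now: datetime) -> None:
        """Record that the URL was scraped at `now`."""
        self._last_seen[url] = now
```

Passing `now` explicitly, instead of calling `datetime.now()` inside the class, keeps the logic easy to test.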

Finally, several pipelines transform the collected data into a common format before it is stored in the database. This is necessary because different portals provide data in slightly different formats.
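As a rough sketch of what such a pipeline might look like, the class below maps portal-specific field names onto one schema. It follows the shape of a Scrapy item pipeline (a `process_item(item, spider)` method) but works on plain dictionaries. The field names in `FIELD_ALIASES` are invented for this example, and `parse_int` assumes whole-number values where a dot is a thousands separator, as in ‘$ 350.000’.

```python
import re
from typing import Optional

# Hypothetical mapping from each portal's field names to one common schema.
FIELD_ALIASES = {
    "price": ("price", "precio", "amount"),
    "bedrooms": ("bedrooms", "dormitorios", "rooms"),
}


def parse_int(value) -> Optional[int]:
    """Extract an integer from values like '$ 350.000' or 2; None if no digits."""
    digits = re.sub(r"\D", "", str(value))
    return int(digits) if digits else None


class NormalizeListingPipeline:
    """Shaped like a Scrapy item pipeline: process_item(item, spider)."""

    def process_item(self, item: dict, spider=None) -> dict:
        normalized = {"url": item.get("url")}
        for target, aliases in FIELD_ALIASES.items():
            # Take the first alias that the portal actually filled in.
            raw = next((item[a] for a in aliases if item.get(a) is not None), None)
            normalized[target] = parse_int(raw) if raw is not None else None
        return normalized
```

A non-numeric price such as ‘Consultar’ ends up as `None` here, which lets later steps decide how to handle listings without a usable price.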

Geocoder

The scraper also includes a geocoder script that assigns coordinates to properties when the real estate agent hasn’t provided them.
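The geocoding step could look roughly like the sketch below. The field names `lat`, `lon`, and `address` are assumptions, and the `geocode` callable stands in for whatever geocoding service is used, which the post does not name.

```python
from typing import Callable, Optional

Coordinates = tuple[float, float]  # (latitude, longitude)


def fill_missing_coordinates(
    listings: list[dict],
    geocode: Callable[[str], Optional[Coordinates]],
) -> int:
    """Geocode listings that lack coordinates; return how many were filled."""
    filled = 0
    for listing in listings:
        if listing.get("lat") is not None and listing.get("lon") is not None:
            continue  # the agent already provided coordinates
        address = listing.get("address")
        if not address:
            continue  # nothing to geocode
        result = geocode(address)
        if result is not None:
            listing["lat"], listing["lon"] = result
            filled += 1
    return filled
```

Injecting `geocode` as a parameter keeps the loop independent of any particular provider and makes it easy to test with a fake lookup.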

Web app

The web application uses the information obtained by the web scraper and displays it for users to see. It also provides several tools to filter this information.

Search methods

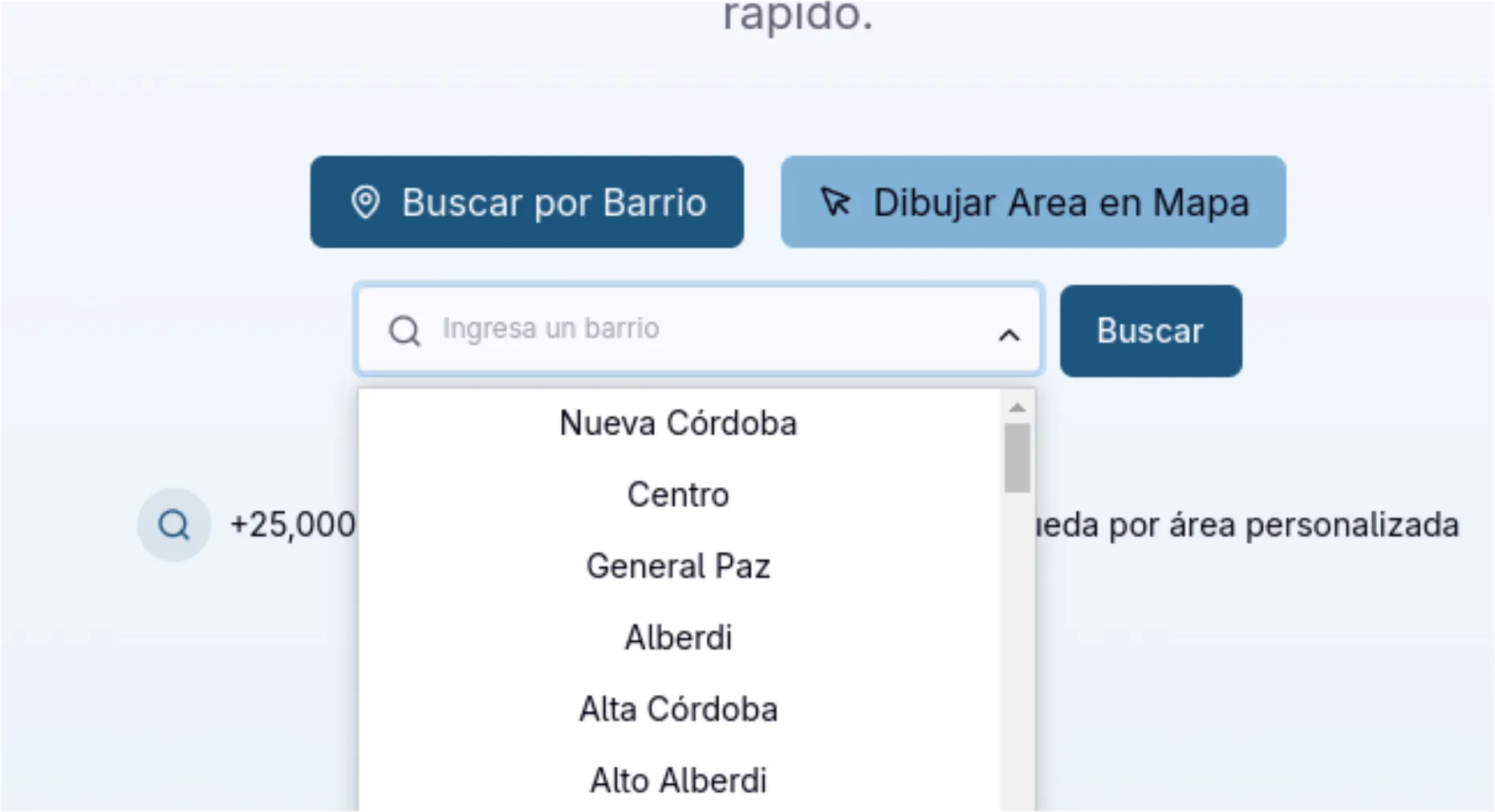

There are two search methods on the website:

- Search by neighborhood, where you can select multiple neighborhoods you are interested in.

- Search by area, where you can draw multiple shapes on the map, and you’ll only get results for properties inside those shapes.
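The area search can be sketched as a query builder that turns the drawn shapes into one PostGIS query. This is an illustration, not the app's actual query: the `listings` table, its `geom` column, and the function name `build_area_query` are assumptions, and the shapes are assumed to arrive as GeoJSON polygon geometries in WGS 84 (SRID 4326). `ST_Contains`, `ST_GeomFromGeoJSON`, and `ST_SetSRID` are standard PostGIS functions.

```python
import json


def build_area_query(polygons: list[dict]) -> tuple[str, list[str]]:
    """Build a query matching listings inside ANY of the drawn GeoJSON polygons."""
    if not polygons:
        raise ValueError("at least one polygon is required")
    # One ST_Contains test per drawn shape, OR-ed together.
    condition = " OR ".join(
        "ST_Contains(ST_SetSRID(ST_GeomFromGeoJSON(%s), 4326), geom)"
        for _ in polygons
    )
    sql = f"SELECT id FROM listings WHERE {condition}"
    # Shapes are passed as parameters, never interpolated into the SQL string.
    params = [json.dumps(p) for p in polygons]
    return sql, params
```

The returned pair can be handed to a driver that uses `%s` placeholders, for example `cursor.execute(sql, params)`. For the query to stay fast on large tables, the geometry column would normally need a spatial (GiST) index.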

Filters

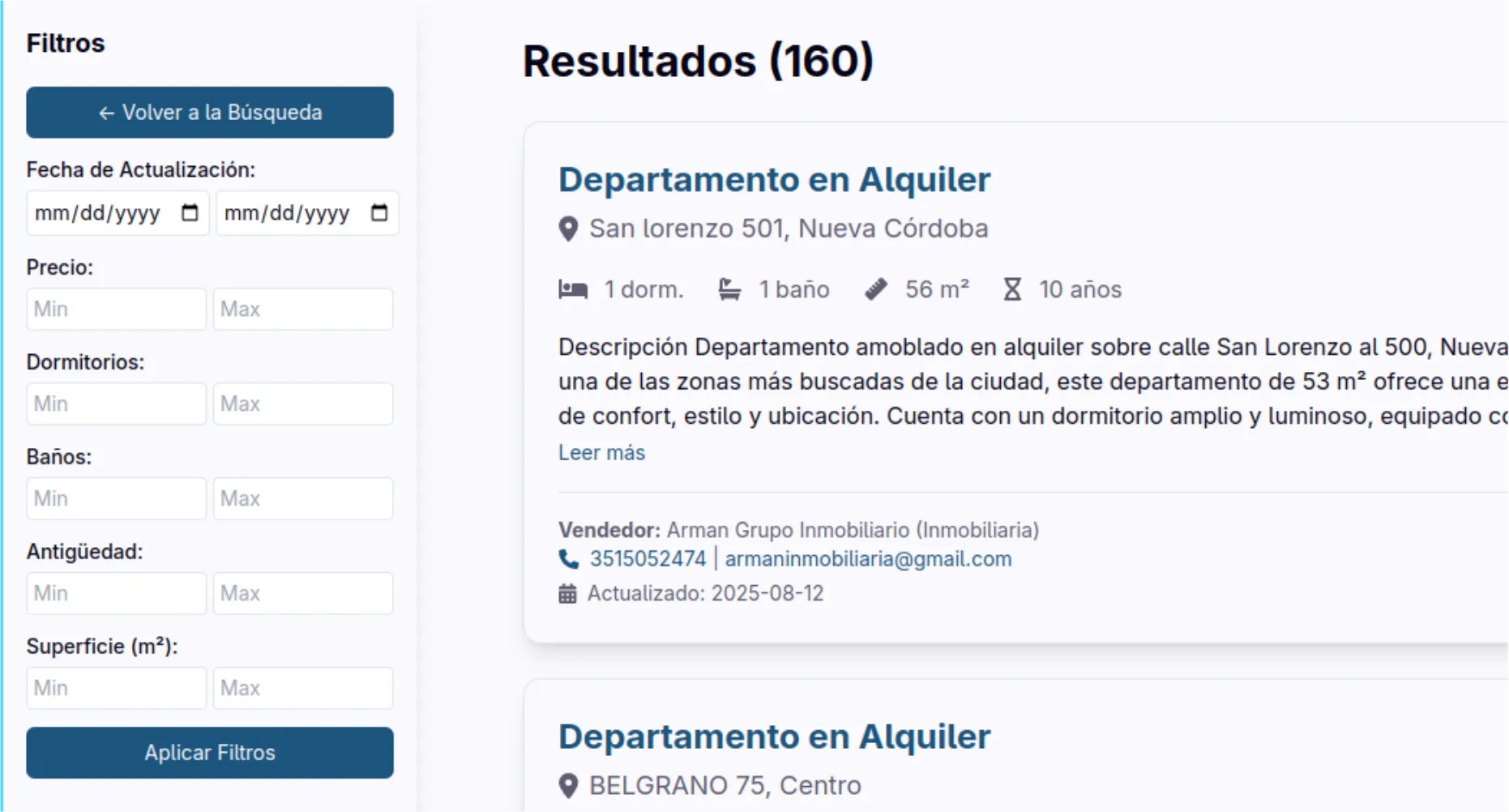

After performing the initial search, the user can further refine it by choosing a range for the following values:

- Update date

- Price

- Bedrooms

- Bathrooms

- Property age

- Property surface
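Range filters like these could be modeled as below. This is a minimal in-memory sketch with assumed names (`Range`, `apply_filters`, and the listing field names). In the real app this filtering would more likely happen in the database query. The sketch also assumes that a listing with a missing value fails any filter that has a bound set.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Range:
    """An inclusive range; None means that side is unbounded."""
    minimum: Optional[float] = None
    maximum: Optional[float] = None

    def contains(self, value: Optional[float]) -> bool:
        if self.minimum is None and self.maximum is None:
            return True  # no bounds set, so nothing to filter on
        if value is None:
            return False  # assumption: missing values fail an active filter
        if self.minimum is not None and value < self.minimum:
            return False
        if self.maximum is not None and value > self.maximum:
            return False
        return True


def apply_filters(listings: list[dict], filters: dict[str, Range]) -> list[dict]:
    """Keep listings whose fields fall inside every given range."""
    return [
        listing
        for listing in listings
        if all(r.contains(listing.get(field)) for field, r in filters.items())
    ]
```

For example, `apply_filters(listings, {"price": Range(maximum=400000), "bedrooms": Range(minimum=2)})` keeps only listings priced at or below 400000 with at least two bedrooms.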

History

In 2021 I was living in Misiones, a province in the north of Argentina. That year I decided to move to the city of Cordoba (1,144 km away).

This meant I was going to need someplace to live.

I tried the main rental portals in Cordoba, but I found a few issues:

- The filters were pretty basic. For example, I couldn’t search for more than one neighborhood at a time

- They wouldn’t let me export the data to a spreadsheet

- I couldn’t filter by the exact neighborhoods and areas on the map I wanted

- I couldn’t search all portals from a single place.

Given this, I decided to take the bull by the horns and write a web scraper to help me find my dream apartment, which I eventually found.

Some time after I moved to Cordoba, several of my friends had asked me to run my scraper to help them find apartments.

That’s what inspired me to create a full-stack app, so they could run searches themselves without having to depend on me.

This is the point where it stopped being just a script on my computer and became a fully-fledged web app, with a database, a frontend and a backend.

Goals

This web app doesn’t try in any way to replace the traditional rental portals, which hold all the traffic, but instead aims to complement them, by providing the user a way to search for apartments in all portals at the same time.

Project Scope & Limitations

As this is just a personal project, the scope is reduced.

- Instead of scraping apartments for all of Argentina, this only focuses on Cordoba.

- It only covers apartments for rent, not properties for sale.

- There are also some performance limitations related to using free tiers from cloud services to host the website.

Future Plans

The next big feature I’m planning to build is a notification system. This would let users save a search (either a neighborhood or a drawn area) and get an email alert when a new property that matches their criteria is scraped.